Art or Not: Creative artificial intelligence

by Dilpreet Singh

In the past, artworks and artefacts were confined to museums and the pages of books. With many museums digitising their collections, works are now readily available to anyone with an Internet connection and a digital device.

Art or Not, an A.I.-powered app created by SensiLab (a research lab based at Monash University), takes advantage of this, allowing users to visually explore the world around them by finding online artworks that correspond to their personal photos.

Experimenta spoke to Dilpreet Singh, SensiLab’s lead app developer, about Art or Not – how it works, and how it can help us discover art and harness creativity.

What does the Art or Not app do?

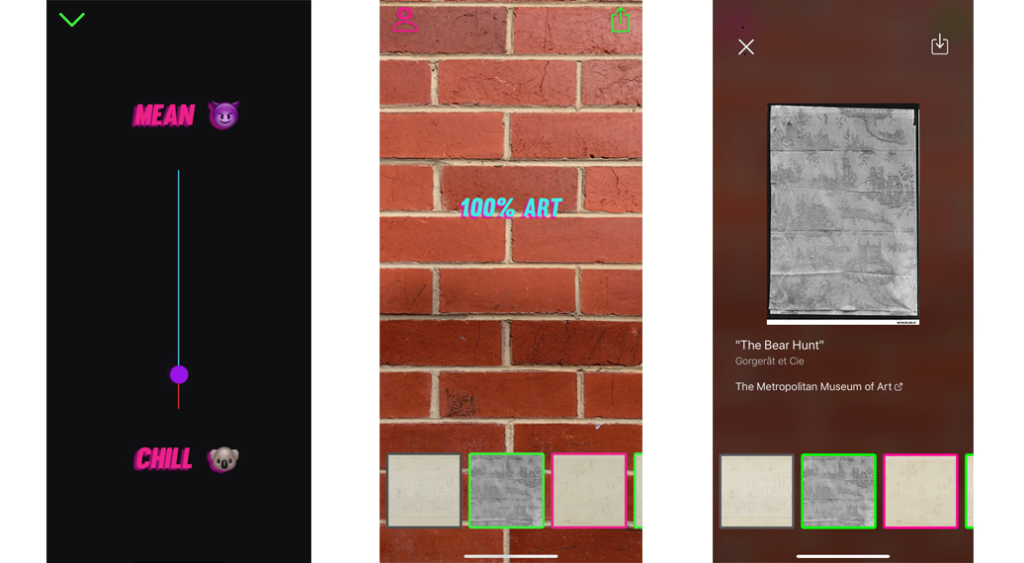

The app is your personal art critic that helps you figure out which things around you are ‘art’ and which are not. You open the app, capture an image of anything, and the app will let you know the outcome. It relies purely on sight to make its decision, as it doesn’t have a philosophical understanding of art, so it may not be the most thorough critic.

The app is also a very useful companion, as it can pull up artworks that look similar to what you capture. For example, taking a photo of a person can pull up Renaissance portraits, and capturing a cup can show you pictures of historic sculptures. Art or Not lets you use the world around you as a way of discovering art.

How does the app decide what constitutes an artwork?

The app uses a convolutional neural network to break down images into a compact set of 350 features that allow it to ‘describe’ an image. With this ability, we then trained the network on over 100,000 artworks (sculptures, Renaissance, abstract, etc.) to give it an understanding of what an ‘artwork’ looks like. When a new image is captured, the app uses the same convolutional neural network to break down the image and then figures out whether it falls in the vicinity of artworks that it has seen before. If it does, the image is deemed ‘art’; if not, it is deemed not ‘art’.
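As a rough illustration of the ‘vicinity’ idea, the sketch below compares a captured image’s feature vector with the feature vectors of known artworks. It is not the app’s actual code: the three-number vectors stand in for the 350 features, and the cosine similarity measure, the 0.9 cut-off, the artwork names, and the function names are all assumptions made for the example.

```python
import math

# Toy feature vectors standing in for the 350 features per artwork (assumed values).
ARTWORKS = {
    "portrait": [0.9, 0.1, 0.0],
    "sculpture": [0.1, 0.9, 0.2],
    "abstract": [0.0, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector is treated as similar to nothing
    return dot / (norm_a * norm_b)


def most_similar(query, artworks, k=3):
    """Return up to k (title, similarity) pairs, most similar first."""
    scored = [(title, cosine_similarity(query, vec)) for title, vec in artworks.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


def is_art(query, artworks, threshold=0.9):
    """Deem the image 'art' if its closest known artwork is similar enough."""
    _, best_score = most_similar(query, artworks, k=1)[0]
    return best_score >= threshold


photo_of_person = [0.8, 0.2, 0.1]   # lies close to the 'portrait' vector
grey_wall = [0.5, 0.5, 0.5]         # not particularly close to any artwork

print(most_similar(photo_of_person, ARTWORKS, k=1)[0][0])
print(is_art(photo_of_person, ARTWORKS), is_art(grey_wall, ARTWORKS))
```

The same ranking serves both purposes described in the interview: the top results are the similar artworks shown to the user, and the best score decides the verdict.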

See this blog post for further technical details: http://ai.sensilab.monash.edu/2018/09/17/similarity-search-engine/

When the app is opened, a sliding dial appears with ‘Mean’ at one end and ‘Chill’ at the other. Can you please explain how the user’s choice – that is, whether the dial is closer to ‘Mean’ or ‘Chill’ – modifies what is ‘Art or Not’?

Moving the slider between these options lets you personalise the critic. A ‘mean’ critic is stricter about what it considers to be art: it expects a higher degree of similarity between the art it knows about and the image you’ve captured before it deems the image art. The ‘chill’ critic is, as the name suggests, a bit more forgiving. It was important to have some kind of personalisation, as the app should feel like your personal art critic rather than the canonical critic.
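One way to picture the slider is as a control over the similarity cut-off. The sketch below is only an assumption about how such a mapping could work, not the app’s implementation: the slider range (0 for fully ‘chill’, 1 for fully ‘mean’), the two threshold values, the linear mapping, and the function names are all hypothetical.

```python
# Hypothetical cut-offs: the minimum similarity required at each end of the slider.
CHILL_THRESHOLD = 0.60
MEAN_THRESHOLD = 0.95


def threshold_for_slider(position):
    """Map a slider position (0.0 = chill, 1.0 = mean) to a similarity cut-off."""
    position = min(1.0, max(0.0, position))  # clamp out-of-range input
    return CHILL_THRESHOLD + position * (MEAN_THRESHOLD - CHILL_THRESHOLD)


def verdict(best_similarity, position):
    """Return the critic's verdict for the best similarity score found."""
    return "Art" if best_similarity >= threshold_for_slider(position) else "Not art"


# The same photo, judged by a chill critic and then by a mean one.
print(verdict(0.8, 0.0))
print(verdict(0.8, 1.0))
```

With these assumed numbers, an image whose closest artwork scores 0.8 passes the chill critic but fails the mean one, which matches the behaviour described above.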

Is there a particular digitised art gallery from which the images used to make this app are sourced?

The artworks are sourced from the digitised public domain collections of The Metropolitan Museum of Art and The Art Institute of Chicago. In total, there are ~120,000 artworks in the app’s repository.

It appears that this app is about personal self-discovery as well as harnessing creativity. What are your thoughts on this?

To me, the app is more a tool for discovery. The art critic side of the app is novel and fun, but I’m much more interested in the similar artworks the app returns. It’s almost a window into the past, allowing me to discover photographs and paintings eerily similar to the world around me, and giving me a way to relate to a particular artwork at a level I never could before. The artwork becomes more than just a historical artefact.

A workshop, Creative Artificial Intelligence: Boosting your Artistic Ability, was led by Dilpreet Singh and Lizzie Crouch on 12 June 2017 at Latrobe Regional Gallery in Morwell, Victoria, as part of Experimenta Make Sense.

SensiLab is a technology-driven, design-focused research lab based at Monash University in Melbourne, Australia. Through its research, the lab explores the creative possibilities of technology – how it changes us and how we can harness it. http://sensilab.monash.edu/

Dilpreet Singh is SensiLab’s lead app developer, creating applications for a diverse range of research projects within the group. He particularly enjoys working with digital imaging and has collaborated on a number of computer vision projects using both traditional machine vision and deep learning techniques. He is also keen on exploring the creative applications of computational photography. He graduated with Honours in Computer Science from Monash University, where his research project explored the synergy of health, fitness, and machine learning.